
Code Walkthrough

The following is an overview of how ONC RPC is implemented in the Linux 2.4.4 kernel. This walkthrough is impartial; performance analysis and potential improvements will be discussed separately.

Data Structures

I will begin by explaining some of the key data structures.

struct rpc_task Each of these structs contains all the information about a particular RPC: what the command is, the command parameters, information about the connection, and so on. When I refer to an RPC in the context of the Linux code, I will actually be referring to an rpc_task struct.

schedq queue When an rpc is ready to resume execution after waiting on another queue, it is placed on the schedq queue. From here, the rpciod kernel thread will remove it from the queue and resume execution of the rpc.

backlog queue Each connection has a backlog queue associated with it. When there are more requests than the RPC layer can send at a time, new requests are placed on the backlog. Each time a request is completed, a request will be taken off of the backlog, and placed on the schedq.

pending queue Each connection has a pending queue. After an rpc has been sent to the server, it is placed on the pending queue to wait for a response. Whenever a reply is received from the server, the corresponding rpc is taken off of the pending queue and placed on the schedq.

The Finite State Machine

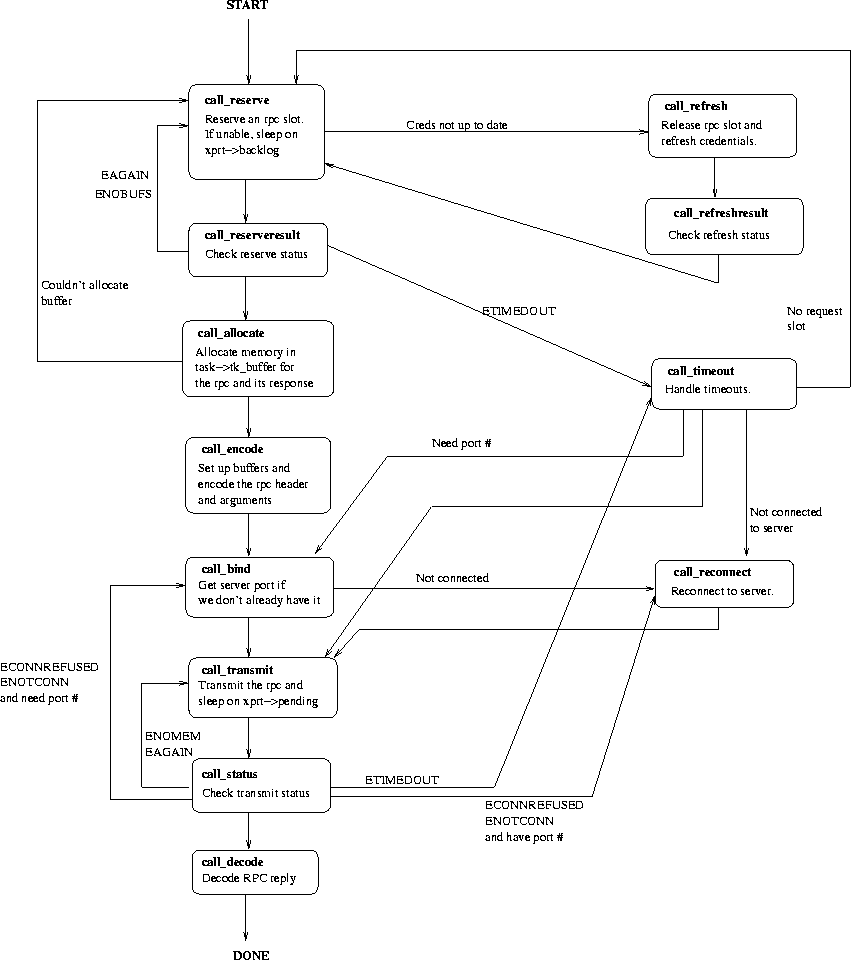

The heart of the RPC layer is a finite state machine. When an rpc is executed, __rpc_execute runs in an infinite loop. In each iteration of the loop, it calls the function pointer in task->tk_action. This function pointer will be one of the call_* functions in net/sunrpc/clnt.c; each of these functions can be thought of as a state in the state machine.

The diagram above shows the state machine. The normal execution path goes straight down the left side of the diagram.
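The loop-over-a-function-pointer idea can be sketched in a few lines of user-space C. This is only an illustration, not the kernel code: the struct is a stripped-down stand-in that keeps just the tk_action field, the three state functions and the steps counter are hypothetical, and the sketch assumes the loop ends once a state leaves no next action set.

```c
#include <stddef.h>

/* Stripped-down stand-in for the kernel's struct rpc_task.
 * Only tk_action mirrors the real structure. */
struct rpc_task {
    void (*tk_action)(struct rpc_task *task); /* next state to run */
    int steps;                                /* hypothetical: states visited */
};

/* Hypothetical final state: clears tk_action so the loop stops. */
static void state_done(struct rpc_task *task)
{
    task->steps++;
    task->tk_action = NULL;
}

/* Hypothetical middle state: selects the final state as successor. */
static void state_transmit(struct rpc_task *task)
{
    task->steps++;
    task->tk_action = state_done;
}

/* Hypothetical first state: selects the middle state as successor. */
static void state_start(struct rpc_task *task)
{
    task->steps++;
    task->tk_action = state_transmit;
}

/* Each iteration runs the current state, and each state picks its
 * own successor by overwriting tk_action. Returns the number of
 * states that were executed. */
int run_task(struct rpc_task *task)
{
    task->steps = 0;
    task->tk_action = state_start;
    while (task->tk_action != NULL)
        task->tk_action(task);
    return task->steps;
}
```

Because every state chooses its own successor, adding a retry or error path only requires a state to point tk_action somewhere other than the next step on the normal path.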

Synchronous vs Asynchronous RPCs

An rpc can be executed in either synchronous or asynchronous mode. The calling code simply sets a flag in the rpc_task struct before calling rpc_execute if it wishes the rpc to be executed asynchronously.

For synchronous RPCs, rpc_execute does not return until the RPC has completed its execution.

For asynchronous RPCs, as soon as the rpc has to wait for something, rpc_execute returns. The rest of the execution will be handled by the rpciod thread. When the RPC has finished, a callback function provided by the original caller will be invoked.
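The difference between the two modes can be illustrated with a small user-space sketch. Everything here is hypothetical and simplified: TASK_ASYNC, struct task, task_execute and daemon_resume are stand-ins invented for this example (daemon_resume plays the role of rpciod), and "waiting" is simulated by a counter rather than by real sleeping.

```c
#include <stdbool.h>
#include <stddef.h>

#define TASK_ASYNC 0x1u  /* hypothetical flag marking an asynchronous task */

struct task {
    unsigned flags;                 /* TASK_ASYNC or 0 */
    int waits_left;                 /* hypothetical: waits before completion */
    bool done;
    int completions;                /* how often the callback has run */
    void (*on_done)(struct task *); /* caller-supplied completion callback */
};

/* Example callback: just records that it was invoked. */
void count_completion(struct task *t)
{
    t->completions++;
}

/* Returns true if the task finished before this call returned. */
bool task_execute(struct task *t)
{
    while (t->waits_left > 0) {
        if (t->flags & TASK_ASYNC)
            return false;   /* async: hand control back to the caller */
        t->waits_left--;    /* sync: block until the wait ends (simulated) */
    }
    t->done = true;
    if ((t->flags & TASK_ASYNC) && t->on_done != NULL)
        t->on_done(t);      /* async completion is reported via callback */
    return true;
}

/* Stand-in for rpciod: the awaited event has arrived, so resume the task. */
bool daemon_resume(struct task *t)
{
    if (t->waits_left > 0)
        t->waits_left--;
    return task_execute(t);
}
```

A synchronous caller can use the result as soon as task_execute returns, while an asynchronous caller regains control at the first wait and learns of completion only through its callback.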

Congestion Control

Congestion control prevents the client from overloading the server. When a TCP connection is used to communicate with the server, congestion control is handled in the TCP layer and can be ignored in the RPC layer. However, when UDP is used, it is the RPC layer's duty to provide congestion control.

The following variables are used in congestion control:

cong: Congestion. This is a measure of how congested the server currently is. Specifically, it is the number of RPCs currently being executed, multiplied by RPC_CWNDSCALE. RPC_CWNDSCALE is set to 256 in the 2.4.4 kernel.

cwnd: The congestion window. When congestion equals or exceeds this value, any new requests will be put on the backlog. cwnd is initialized to RPC_CWNDSCALE, and modified dynamically, as will be explained below.

congtime: This is the time at which cwnd may be incremented. Each time cwnd is modified, congtime is set to a new value. More on this below.
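As a rough illustration of how cong and cwnd interact, the sketch below models the admission decision in user-space C. The struct window, the backlog counter, and the function names are hypothetical; only RPC_CWNDSCALE and the "cong equals or exceeds cwnd" rule come from the description above.

```c
#include <assert.h>
#include <stdbool.h>

#define RPC_CWNDSCALE 256UL  /* value given above for the 2.4.4 kernel */

/* Hypothetical per-connection state for this sketch. */
struct window {
    unsigned long cong;  /* requests in flight, scaled by RPC_CWNDSCALE */
    unsigned long cwnd;  /* congestion window, same scale */
    unsigned backlog;    /* stand-in for the length of the backlog queue */
};

/* Returns true if the request may be sent now, false if it was
 * placed on the backlog because the window is full. */
bool try_send(struct window *w)
{
    if (w->cong >= w->cwnd) {
        w->backlog++;
        return false;
    }
    w->cong += RPC_CWNDSCALE;  /* one more request in flight */
    return true;
}

/* Called when a request completes: frees one slot in the window. */
void request_done(struct window *w)
{
    assert(w->cong >= RPC_CWNDSCALE);  /* must have had a request in flight */
    w->cong -= RPC_CWNDSCALE;
}
```

With cwnd at its initial value of RPC_CWNDSCALE, exactly one request fits in the window; a second one is backlogged until the first completes.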

Each time an RPC is completed successfully, cwnd will be incremented if the following conditions are met:

- cong is greater than or equal to cwnd. A successful RPC when cong is less than cwnd does not tell us anything about whether cwnd ought to be increased.

- current time is greater than congtime. This prevents cwnd from growing too quickly.

When these conditions are met, cwnd and congtime are set by:

cwnd += (RPC_CWNDSCALE * RPC_CWNDSCALE + (cwnd/2)) / cwnd

congtime = jiffies + HZ*4 * (cwnd/RPC_CWNDSCALE)

jiffies is the current time in jiffies, and HZ is the number of jiffies per second. So, if the current cwnd is 512, congtime will be set such that cwnd will not be incremented again for 8 seconds. Notice that as cwnd increases, it is not allowed to increment as often, nor by as much.
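The growth rule can be tried out with the following user-space sketch. It is an illustration under stated assumptions, not the kernel function: struct cong_state and cong_on_success are hypothetical names, HZ is assumed to be 100, the current time is passed in as a parameter instead of being read from jiffies, counter wraparound is ignored, and the congtime expression multiplies before dividing so that integer division does not truncate the cwnd/RPC_CWNDSCALE ratio.

```c
#include <stdbool.h>

#define RPC_CWNDSCALE 256UL
#define HZ 100UL  /* assumption: 100 jiffies per second */

/* Hypothetical container for the three variables described above. */
struct cong_state {
    unsigned long cong;
    unsigned long cwnd;
    unsigned long congtime;
};

/* Apply the growth rule after a successful RPC at time `now` (in
 * jiffies). Returns true if cwnd was incremented. */
bool cong_on_success(struct cong_state *s, unsigned long now)
{
    if (s->cong < s->cwnd)
        return false;  /* window was not full: success tells us nothing */
    if (now <= s->congtime)
        return false;  /* too soon since the last change */

    /* The cwnd/2 term rounds the division to the nearest integer. */
    s->cwnd += (RPC_CWNDSCALE * RPC_CWNDSCALE + s->cwnd / 2) / s->cwnd;

    /* Next increment allowed 4 seconds per window slot from now. */
    s->congtime = now + (HZ * 4 * s->cwnd) / RPC_CWNDSCALE;
    return true;
}
```

Starting from cwnd = 256 with a full window, the first success raises cwnd to 512 and pushes congtime 8 seconds ahead; the next eligible success adds only 128, taking cwnd to 640 with a 10 second delay.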

When a timeout occurs, it is a sign that too much is being sent to the server at once, and cwnd must be decreased.

cwnd and congtime are set by:

cwnd = cwnd / 2

congtime = jiffies + HZ*8 * (cwnd/RPC_CWNDSCALE)
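A minimal sketch of the timeout rule follows, again in user-space C with hypothetical function names and HZ assumed to be 100. One detail is an assumption of this sketch rather than something stated above: cwnd is clamped so that it never falls below RPC_CWNDSCALE, since a smaller window would eventually shrink to zero and admit no requests at all.

```c
#define RPC_CWNDSCALE 256UL
#define HZ 100UL  /* assumption: 100 jiffies per second */

/* Halve the window after a timeout. */
unsigned long cwnd_after_timeout(unsigned long cwnd)
{
    cwnd /= 2;
    if (cwnd < RPC_CWNDSCALE)  /* assumption: keep room for one request */
        cwnd = RPC_CWNDSCALE;
    return cwnd;
}

/* Earliest time (in jiffies) at which the halved window may grow
 * again: 8 seconds per window slot, measured from `now`. Multiplying
 * before dividing avoids truncating the window ratio. */
unsigned long congtime_after_timeout(unsigned long now, unsigned long new_cwnd)
{
    return now + (HZ * 8 * new_cwnd) / RPC_CWNDSCALE;
}
```

For example, a timeout at cwnd = 1024 leaves a window of 512 that cannot grow for 16 seconds, twice the delay that a successful increase to the same window size would impose.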

Summary

That sums up how RPC is implemented in the 2.4.4 kernel. I am currently working on evaluating the RPC layer's performance, and will hopefully be making some improvements.
