
UPDATE: I've obtained new results for our F85 file server using a larger RAID volume. The results are below.

I took some simple measurements of read and write performance to get an idea of how well NFS currently performs. They will also serve as a baseline for measuring how future modifications affect overall performance.

The tests were done using the Sun Connectathon basic5 test. The write test creates a new file on an NFS partition, copies data from memory into the file, and then closes it. The read test simply reads the data back in.
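The two phases can be sketched roughly as follows. This is a minimal Python approximation of what the write and read tests measure, not the Connectathon source (which is written in C). The function names `timed_write` and `timed_read` and the 8 KB per-call transfer size are assumptions made for illustration.

```python
import os
import time
from typing import Tuple

CHUNK = 8192  # assumed size of each write()/read() call (hypothetical)


def timed_write(path: str, total_bytes: int) -> float:
    """Create `path`, fill it with `total_bytes` from an in-memory buffer,
    and close it. Returns throughput in bytes per second.

    The timed region includes close(), since an NFS client typically
    flushes dirty data to the server at that point.
    """
    buf = b"\xa5" * CHUNK  # source data already in memory; no input file
    start = time.perf_counter()
    fd = os.open(path, os.O_CREAT | os.O_TRUNC | os.O_WRONLY, 0o644)
    try:
        remaining = total_bytes
        while remaining > 0:
            # os.write may write fewer bytes than requested; loop until done.
            remaining -= os.write(fd, buf[: min(CHUNK, remaining)])
    finally:
        os.close(fd)
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
    return total_bytes / elapsed


def timed_read(path: str) -> Tuple[int, float]:
    """Read `path` back in CHUNK-sized pieces.

    Returns (bytes_read, throughput in bytes per second).
    """
    total = 0
    start = time.perf_counter()
    fd = os.open(path, os.O_RDONLY)
    try:
        while True:
            data = os.read(fd, CHUNK)
            if not data:  # empty result means end of file
                break
            total += len(data)
    finally:
        os.close(fd)
    elapsed = max(time.perf_counter() - start, 1e-9)
    return total, total / elapsed
```

For example, `timed_write("/mnt/nfs/basic5.dat", 20_000_000)` would time a 20 MB write to a (hypothetical) NFS mount point, and `timed_read` on the same path would time reading it back.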

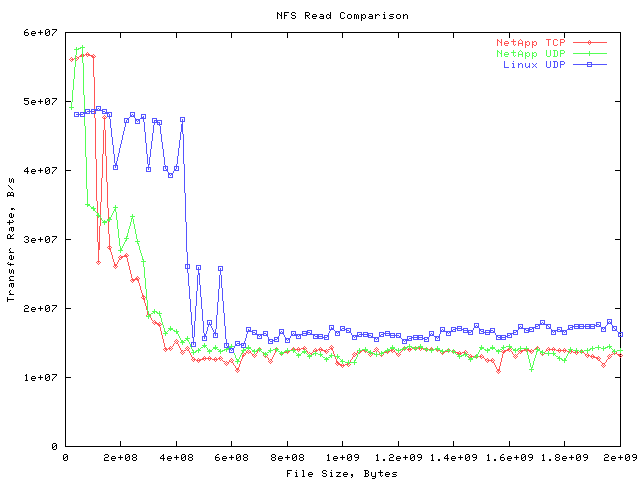

Because I am interested in the performance of the RPC layer, I wanted every test to involve network activity. In particular, I didn't want the read tests to be satisfied from the client's cache. To prevent this, I had the server touch the file before each read test. The updated modification time forces the client to invalidate its cached copy and actually read the data from the server.
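Why touching the file works can be illustrated with a toy model of attribute-based cache validation: the client remembers the modification time it saw when it cached the data, and on the next open it compares that with the server's current attributes (a GETATTR in NFS). The classes below are hypothetical and heavily simplified; a real NFS client also has attribute-cache timeouts and close-to-open rules that this sketch ignores.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ServerFile:
    """Hypothetical server-side file: contents plus a modification time (ns)."""
    data: bytes
    mtime_ns: int = field(default_factory=time.time_ns)

    def touch(self) -> None:
        # Like `touch` on an existing file: bump mtime, leave data unchanged.
        # max() guarantees a strictly newer mtime even if the clock hasn't ticked.
        self.mtime_ns = max(time.time_ns(), self.mtime_ns + 1)


@dataclass
class CachingClient:
    """Toy client that caches one file and revalidates on every open."""
    cached_data: Optional[bytes] = None
    cached_mtime_ns: Optional[int] = None
    network_reads: int = 0

    def open_and_read(self, f: ServerFile) -> bytes:
        # Stand-in for an NFS GETATTR: compare the server's current mtime
        # with the one recorded when the data was cached.
        if self.cached_data is None or f.mtime_ns != self.cached_mtime_ns:
            # Nothing cached, or attributes changed: invalidate and refetch.
            self.cached_data = f.data
            self.cached_mtime_ns = f.mtime_ns
            self.network_reads += 1
        return self.cached_data


f = ServerFile(b"payload")
client = CachingClient()
client.open_and_read(f)      # first read goes to the "server"
client.open_and_read(f)      # served from the client cache
f.touch()                    # server-side touch before the read test
client.open_and_read(f)      # changed mtime detected, data fetched again
print(client.network_reads)  # 2
```

Without the touch, the repeated read costs no network traffic at all, which is exactly what the read tests need to avoid.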

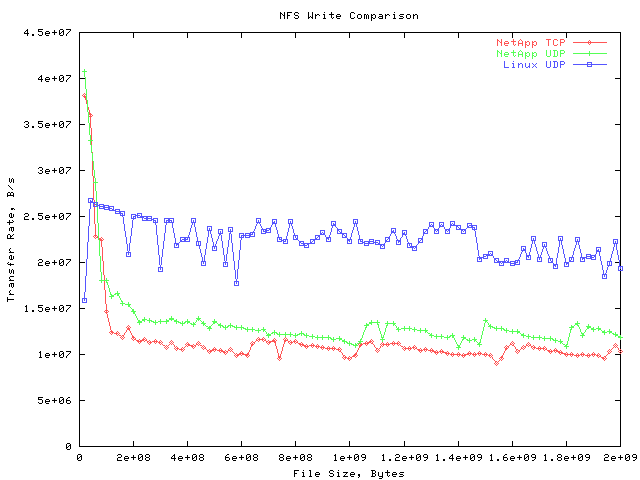

I ran three versions of the test. The first runs against our Network Appliance F85 file server, with the NFS partition mounted using UDP. The second uses the same server, but with the partition mounted using TCP. The third uses a Linux server mounted with UDP; the Linux NFS server does not currently support TCP.

The first point on the Linux UDP curve looked odd, so I ran an additional test on a 20 MB file and got 26,000,000 B/s. This matches the neighboring points.

In the write test, the Network Appliance server performs rather poorly compared with the Linux server. This may be because the Linux client is tuned to work with the Linux server. (See my analysis using rpcstat for a partial explanation of the F85's poor performance.)

Write performance on the F85 drops sharply at roughly 60 MB, probably because the F85 has 64 MB of NVRAM. For files under 64 MB, the server can commit the data to NVRAM without immediately writing it to disk. Anything beyond 64 MB, however, must be written to disk.
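The NVRAM explanation can be made concrete with a simple two-rate model: bytes that fit in NVRAM are absorbed at a fast rate, and the remainder proceeds at disk speed. The function name and all rates below are hypothetical placeholders, not measured F85 figures; the model only shows the expected shape of the curve, flat up to the NVRAM size and falling beyond it.

```python
def modeled_write_throughput(size_bytes: float,
                             nvram_bytes: float,
                             fast_rate: float,
                             disk_rate: float) -> float:
    """Effective write throughput (bytes/s) under a two-rate NVRAM model.

    Assumption: the first `nvram_bytes` are absorbed at `fast_rate`;
    anything beyond that must wait for the disk and proceeds at `disk_rate`.
    """
    if size_bytes <= 0:
        return 0.0
    in_nvram = min(size_bytes, nvram_bytes)
    to_disk = max(0.0, size_bytes - nvram_bytes)
    elapsed = in_nvram / fast_rate + to_disk / disk_rate
    return size_bytes / elapsed


MB = 2**20
NVRAM = 64 * MB
# Illustrative rates only (not measurements): 10 MB/s fast path, 4 MB/s disk.
for size_mb in (32, 64, 128, 256):
    rate = modeled_write_throughput(size_mb * MB, NVRAM, 10 * MB, 4 * MB)
    print(f"{size_mb:4d} MB -> {rate / MB:5.2f} MB/s")
```

With these placeholder rates the printed throughput stays flat through 64 MB and then falls toward the disk rate as the file grows, because an ever larger share of each file has to go through the slow path.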

It is also interesting that the TCP test does not perform as well as the UDP test. This may be because TCP has higher network overhead than UDP.

In the read test, the Linux server again gives the best performance, although not by as wide a margin as in the write test. The TCP and UDP tests against the F85 performed roughly equally.

The F85's read performance declined gradually as the file size approached 400 MB. In contrast, the Linux server's performance remained fairly steady until 420 MB and then dropped suddenly. I am not yet sure why performance drops at nearly the same file size in both cases.
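When comparing where the curves fall off, it can help to locate the drop programmatically rather than by eye. The helper below is hypothetical and not part of the test suite: it returns the first file size at which throughput falls by more than a chosen fraction relative to the previous measurement. The 20% default threshold is an arbitrary assumption.

```python
from typing import Optional, Sequence


def first_dropoff(sizes_mb: Sequence[float],
                  rates: Sequence[float],
                  threshold: float = 0.20) -> Optional[float]:
    """Return the first size whose throughput is more than `threshold`
    (as a fraction) below the preceding point, or None if there is none.

    `sizes_mb` must be sorted ascending and aligned with `rates`.
    """
    if len(sizes_mb) != len(rates):
        raise ValueError("sizes_mb and rates must have the same length")
    for i in range(1, len(rates)):
        prev, cur = rates[i - 1], rates[i]
        # Relative drop from the previous point; skip non-positive baselines.
        if prev > 0 and (prev - cur) / prev > threshold:
            return sizes_mb[i]
    return None
```

A per-step threshold like this catches a sudden cliff such as the Linux server's, but a gradual decline like the F85's read curve may never trip it; comparing each point against the curve's peak would be one alternative for that case.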

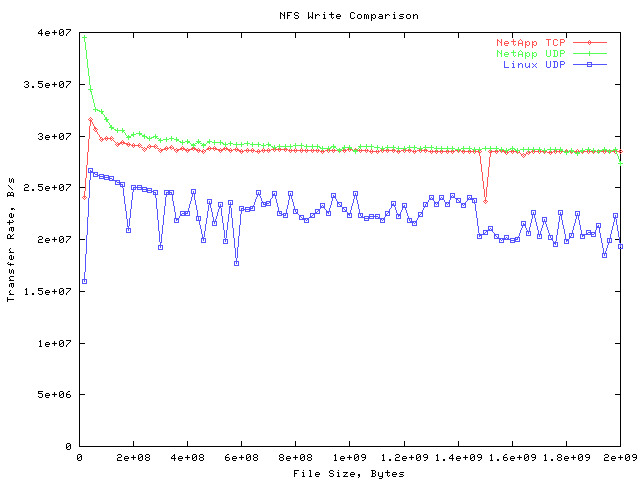

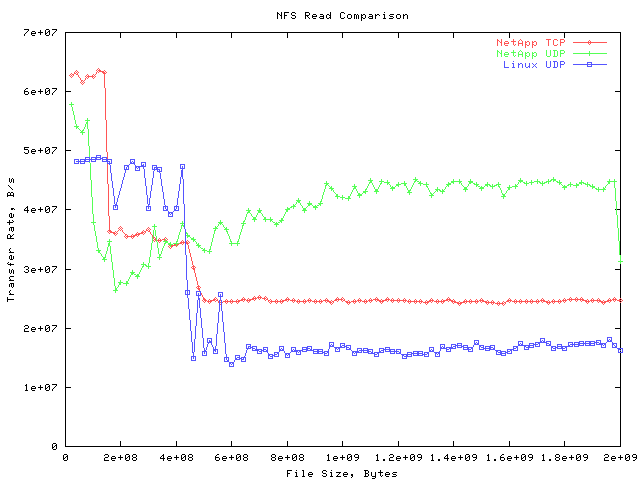

New Results

While testing with my rpcstat tool, I found that Plymouth, our F85, was performing poorly because it was using a two-disk RAID volume. I have since taken new measurements of Plymouth's read and write performance using a larger volume, with much better results.

The F85 now outperforms the Linux server by a wide margin. UDP performance is still slightly better than TCP performance.

The F85's performance drops at around 180 MB. The Linux server's performance holds up until the file size reaches about 400 MB, at which point it drops. I hypothesize that the better performance for small files is due to caching on the server. I believe I eliminated caching effects on the client; moreover, if the caching were on the client, the drop-offs should occur at the same file size for both servers.

The F85 shows a second drop at 400 MB. I'm not sure what causes this.

For large files, the F85 using UDP is the clear winner. The F85 is expected to outperform the Linux server at these sizes, since performance is bound by disk throughput and the F85 uses a large RAID array. However, I'm not sure why UDP performs so much better than TCP.
